SleepKit is an AI Development Kit (ADK) that allows developers to easily build and deploy real-time sleep-monitoring models on Ambiq's family of ultra-low power SoCs. SleepKit explores a number of sleep-related tasks, including sleep staging and sleep apnea detection. The kit includes a variety of datasets, feature sets, efficient model architectures, and a number of pre-trained models. The objective of the models is to outperform conventional, hand-crafted algorithms with efficient AI models that still fit within the stringent resource constraints of embedded devices.

8MB of SRAM, the Apollo4 has more than enough compute and storage to handle complex algorithms and neural networks while displaying vibrant, crystal-clear, and smooth graphics. If additional memory is required, external memory is supported through Ambiq’s multi-bit SPI and eMMC interfaces.

You can think of it as a way to make predictions, such as whether a small house should be priced at ten thousand pounds, or what the temperature will be over the coming weekend.

This post describes four projects that share a common theme of enhancing or using generative models, a branch of unsupervised learning techniques in machine learning.

Some endpoints are deployed in remote places and may only have limited or periodic connectivity. For this reason, the proper processing capabilities should be made available in the right location.

IoT endpoint device manufacturers can expect unmatched power efficiency, letting them build more capable devices that process AI/ML functions better than before.

IDC’s research highlights that becoming a digital business requires a strategic focus on experience orchestration. By investing in technologies and processes that improve daily operations and interactions, companies can raise their digital maturity and stand out from the crowd.

DeepMind claims that RETRO’s database is easier to filter for harmful language than a monolithic black-box model, but it has not fully tested this. More insight may come from the BigScience initiative, a consortium set up by AI company Hugging Face, which involves around 500 researchers, many from big tech companies, volunteering their time to build and study an open-source language model.

Even though printf will typically not be used after the feature is released, neuralSPOT offers power-aware printf support so that debug-mode power consumption is close to that of the final build.

Once the audio is collected, the example processes it by extracting mel-scale spectrograms and passes those into a TensorFlow Lite for Microcontrollers model for inference. After invoking the model, the code processes the result and prints the most likely keyword on the SWO debug interface. Optionally, it will dump the collected audio to a PC over a USB cable using RPC.
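The processing chain described above (audio frame, mel-scale energies, model scores, most likely keyword) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the neuralSPOT or TensorFlow Lite for Microcontrollers code: every function name here is hypothetical, the DFT is a naive one chosen for readability, and the model scores are passed in as a plain list instead of coming from a real inference call.

```python
import cmath
import math


def hz_to_mel(hz):
    # Common (HTK-style) mel-scale formula.
    return 2595.0 * math.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def power_spectrum(frame):
    """Naive DFT power spectrum of one real-valued frame (bins 0..N/2)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(abs(acc) ** 2)
    return spectrum


def mel_filterbank(num_filters, fft_size, sample_rate):
    """Triangular filters whose centers are evenly spaced on the mel scale."""
    low, high = hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0)
    step = (high - low) / (num_filters + 1)
    mel_points = [low + i * step for i in range(num_filters + 2)]
    bins = [int(math.floor((fft_size + 1) * mel_to_hz(m) / sample_rate)) for m in mel_points]
    filters = []
    for i in range(1, num_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        row = [0.0] * (fft_size // 2 + 1)
        for k in range(left, center):      # rising edge
            row[k] = (k - left) / (center - left)
        for k in range(center, right):     # peak and falling edge
            row[k] = (right - k) / (right - center)
        filters.append(row)
    return filters


def log_mel_frame(frame, filters):
    """One column of a log mel spectrogram for a single audio frame."""
    spectrum = power_spectrum(frame)
    energies = [sum(w * p for w, p in zip(row, spectrum)) for row in filters]
    return [math.log(e + 1e-10) for e in energies]


def most_likely(labels, scores):
    """Pick the label with the highest score (stand-in for post-processing)."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best]


def tone(freq_hz, num_samples=64, sample_rate=16000):
    # Synthetic test signal, used here in place of captured microphone audio.
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate) for t in range(num_samples)]
```

On a device, the frame would come from the audio peripheral and the scores from the model's output tensor; the sketch only shows how the pieces fit together.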

To get a glimpse into the future of AI and understand the foundation of AI models, anyone with an interest in the possibilities of this rapidly developing field should know its basics. Explore our comprehensive Artificial Intelligence Syllabus for a deep dive into AI technologies.

Prompt: Several giant wooly mammoths approach treading through a snowy meadow, their long wooly fur lightly blows in the wind as they walk, snow covered trees and dramatic snow capped mountains in the distance, mid afternoon light with wispy clouds and a sun high in the distance creates a warm glow, the low camera view is stunning capturing the large furry mammal with beautiful photography, depth of field.

It can be tempting to focus on optimizing inference: it is compute, memory, and energy intensive, and a very visible 'optimization target'. In the context of total system optimization, however, inference is usually a small slice of overall power consumption.
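A quick back-of-the-envelope budget makes this point concrete. The sketch below is illustrative only: the stage names and every power and duration figure are made-up assumptions, not measurements from any Ambiq device. It multiplies average power (mW) by active time (s) to get energy (mJ) per stage and then reports each stage's share of one duty cycle.

```python
def energy_share(stages):
    """Return each stage's fraction of the total energy in one duty cycle.

    `stages` maps a stage name to (average_power_mw, active_seconds).
    """
    energy_mj = {name: power * seconds for name, (power, seconds) in stages.items()}
    total = sum(energy_mj.values())
    return {name: energy / total for name, energy in energy_mj.items()}


# Hypothetical numbers for a 10-second duty cycle (assumptions, not measurements).
cycle = {
    "sensor_capture": (0.5, 1.0),       # low power, but active for a long time
    "feature_extraction": (2.0, 0.05),
    "inference": (5.0, 0.01),           # highest power, very short burst
    "sleep": (0.01, 8.94),
}

shares = energy_share(cycle)
for name, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{name:20s} {share:6.1%}")
```

With numbers like these, inference draws the most power while it runs but still accounts for only a small fraction of the cycle's energy, which is why sensor capture and sleep behavior deserve equal attention.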

This tremendous amount of information is out there and to a large extent easily accessible, either in the physical world of atoms or the digital world of bits. The only tricky part is to develop models and algorithms that can analyze and understand this treasure trove of data.

Accelerating the Development of Optimized AI Features with Ambiq’s neuralSPOT

Ambiq’s neuralSPOT® is an open-source AI developer-focused SDK designed for our latest Apollo4 Plus system-on-chip (SoC) family. neuralSPOT provides an on-ramp to the rapid development of AI features for our customers’ AI applications and products. Included with neuralSPOT are Ambiq-optimized libraries, tools, and examples to help jumpstart AI-focused applications.

UNDERSTANDING NEURALSPOT VIA THE BASIC TENSORFLOW EXAMPLE

Often, the best way to ramp up on a new software library is through a comprehensive example. This is why neuralSPOT includes basic_tf_stub, an illustrative example that leverages many of neuralSPOT’s features.

In this article, we walk through the example block-by-block, using it as a guide to building AI features using neuralSPOT.

Ambiq's Vice President of Artificial Intelligence, Carlos Morales, went on CNBC Street Signs Asia to discuss the power consumption of AI and trends in endpoint devices.

Since 2010, Ambiq has been a leader in ultra-low power semiconductors that enable endpoint devices with more data-driven and AI-capable features while reducing energy requirements by up to 10X. They do this with the patented Subthreshold Power Optimized Technology (SPOT®) platform.

Computer inferencing is complex, and for endpoint AI to become practical, these devices have to drop from megawatts of power to microwatts. This is where Ambiq has the power to change industries such as healthcare, agriculture, and Industrial IoT.

Ambiq Designs Low-Power for Next Gen Endpoint Devices

Ambiq’s VP of Architecture and Product Planning, Dan Cermak, joins the ipXchange team at CES to discuss how manufacturers can improve their products with ultra-low power. As technology becomes more sophisticated, energy consumption continues to grow. Here Dan outlines how Ambiq stays ahead of the curve by planning for energy requirements 5 years in advance.

Ambiq’s VP of Architecture and Product Planning at Embedded World 2024

Ambiq specializes in ultra-low-power SoCs designed to make intelligent battery-powered endpoint solutions a reality. These days, just about every endpoint device incorporates AI features, including anomaly detection, speech-driven user interfaces, audio event detection and classification, and health monitoring.

Ambiq's ultra-low-power, high-performance platforms are ideal for implementing this class of AI features, and we at Ambiq are dedicated to making implementation as easy as possible by offering open-source, developer-centric toolkits, software libraries, and reference models to accelerate AI feature development.

NEURALSPOT - BECAUSE AI IS HARD ENOUGH

neuralSPOT is an AI developer-focused SDK in the true sense of the word: it includes everything you need to get your AI model onto Ambiq’s platform. You’ll find libraries for talking to sensors, managing SoC peripherals, and controlling power and memory configurations, along with tools for easily debugging your model from your laptop or PC, and examples that tie it all together.

Facebook | Linkedin | Twitter | YouTube

Edward Furlong Then & Now!

Edward Furlong Then & Now! Anthony Michael Hall Then & Now!

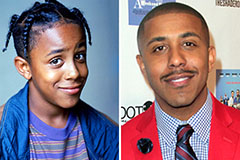

Anthony Michael Hall Then & Now! Marques Houston Then & Now!

Marques Houston Then & Now! Freddie Prinze Jr. Then & Now!

Freddie Prinze Jr. Then & Now! Jaclyn Smith Then & Now!

Jaclyn Smith Then & Now!